First posted on the ORIGINal Thoughts Blog

Founder and CEO, DataSeer

Vancouver, BC

Email: tim@dataseer.ai

LinkedIn: linkedin.com/in/drtimvines/

Principal, Lindsay Morton Communications

Email: lindsayclaramorton@gmail.com

LinkedIn: linkedin.com/in/lindsaymorton/

Bluesky: @lindseeeeeee.bsky.social

Partnerships Director, DataSeer

Philadelphia, PA

Email: adrian@dataseer.ai

LinkedIn: linkedin.com/in/adrianstanley13

Bluesky: @adrian13.bsky.social

Take Home Points:

- AI can make transparency in research practical, scalable, and achievable.

- AI can offer signals, indicators, or even scoring on submissions to help filter articles needing more attention. One can also compare scores and signals across different AI tools.

- Seamless integration into workflows ensures researchers and editors comply with policy without additional burden or cost.

- Recognizing excellence and addressing errors collaboratively fosters a stronger research culture.

- Partnerships among stakeholders and AI developers are essential to drive systemic change.

- Using AI proactively safeguards the integrity of scholarly publishing while elevating its standards.

The benefits of sharing research data are well-documented: improving understanding, enhancing research integrity, and enabling reproducibility and reuse (UNESCO 2023). Data sharing maximizes the impact of scholarly work. However, while the value of transparent research practices like data sharing, code sharing, and preprinting is widely recognized, implementing these practices consistently and effectively remains a significant challenge.

Leading publishers have policies to promote data sharing. For instance, Taylor & Francis and Springer Nature have implemented multiple levels of standardized data-sharing policies across their journals. Similarly, the PLOS journals require authors to make all data underlying their findings fully available without restriction at the time of publication.

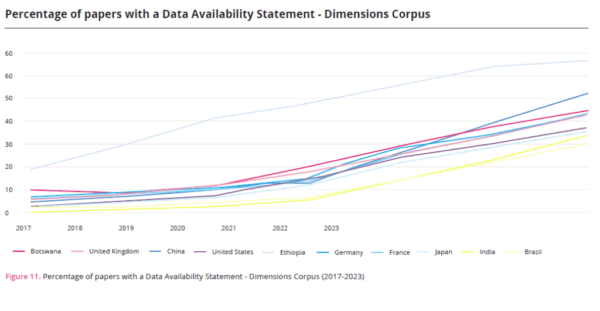

Despite these policies and year-on-year improvements, “The State of Open Data 2024,” a special report from Figshare, Digital Science, and Springer Nature, highlights that bridging the gap between policy and practice remains challenging. The report emphasizes the need for continued efforts to align researcher behaviors with established data-sharing policies to fully realize the benefits of open data.

Image courtesy of The State of Open Data 2024 report.

AI in Scholarly Communication: Transforming Transparency

AI has revolutionized scientific research, enabling large-scale, cross-disciplinary studies tackling challenges like climate change and public health. These same technologies can be equally transformative in scholarly communication.

Tools like DataSeer Snapshot, Clear Skies’ Papermill Alarm, and plagiarism detection software such as iThenticate demonstrate AI’s ability to bridge the gap between theory and practice. By analyzing metadata and full text, flagging problematic submissions, and supporting compliance with funder mandates and journal policies, AI can make research transparency actionable and achievable.

AI publishing tools help us move transparent practices from aspirational ideals to standard operating procedures, enhancing research quality and integrity. Think of these tools as an extra editorial assistant: one focused solely on research integrity, who completes tasks consistently and gives reasoned, well-documented explanations for their decisions. Journals that aren’t using tools like this in the next 1-2 years are likely to fall behind key competitor journals.

Three Pathways to Change

AI supports research transparency and integrity in editorial offices through three key mechanisms: understanding, integration, and intervention.

1. Understanding: Benchmarking and tracking progress

AI enables unprecedented insights into research communication norms across journals, institutions, and disciplines. By consolidating metadata and overcoming inconsistencies, AI allows us to:

- Benchmark current practices

- Identify areas for improvement

- Measure the impact of interventions

For example, by leveraging reports generated by AI, publishers, funders, and institutions can track compliance with mandates and identify successful practices that can be scaled across the industry. Two excellent examples are the PLOS Open Science Indicators and Silverchair Sensus funder reports.
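As a rough illustration of the benchmarking idea above, the sketch below computes the share of articles with shared data, grouped by year or by journal. The records, the field names (`journal`, `year`, `data_shared`), and the function `sharing_rate_by_group` are all hypothetical; they stand in for whatever per-article indicators an AI screening tool might export, and are not the format of any particular product.

```python
from collections import defaultdict

# Hypothetical per-article indicators (illustrative values, not real data).
articles = [
    {"journal": "Journal A", "year": 2023, "data_shared": False},
    {"journal": "Journal A", "year": 2023, "data_shared": True},
    {"journal": "Journal A", "year": 2024, "data_shared": True},
    {"journal": "Journal B", "year": 2023, "data_shared": False},
    {"journal": "Journal B", "year": 2024, "data_shared": True},
    {"journal": "Journal B", "year": 2024, "data_shared": True},
]


def sharing_rate_by_group(records, key):
    """Return {group: fraction of records in that group with data shared}."""
    totals = defaultdict(int)
    shared = defaultdict(int)
    for rec in records:
        group = rec[key]
        totals[group] += 1
        if rec["data_shared"]:
            shared[group] += 1
    return {group: shared[group] / totals[group] for group in totals}


# Benchmark by year to track progress, or by journal to compare titles.
by_year = sharing_rate_by_group(articles, "year")
by_journal = sharing_rate_by_group(articles, "journal")

for year in sorted(by_year):
    print(f"{year}: {by_year[year]:.0%} of articles shared data")
```

Running the same calculation before and after a policy change is one simple way to measure the impact of an intervention.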

2. Integration: Meeting researchers where they are

Researchers are primarily focused on conducting research; any additional requirements must be seamlessly integrated into existing workflows to maximize uptake. AI tools embedded in submission systems, for example checks that flag missing research data links or confirm the presence of a well-written data availability statement during manuscript submission, make it easier for researchers to comply with transparency standards without disrupting their primary work. In the absence of integrated tools, checking for open data becomes yet another task that the Editorial Office has to take on, often with limited time and limited expertise in the various data types collected at that journal.

The efficiency and effectiveness of automation and direct system integration, as opposed to manual curation by editorial staff, underscore the importance of partnerships in creating change. When tools like DataSeer Snapshot are embedded into a journal’s submission platform, or into upstream or downstream workflows, policy compliance feels simpler for authors and editors alike, becoming a standard component of submission and assessment, rather than an adjunct.
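To make the idea of a submission-time check concrete, here is a deliberately simple, rule-based sketch that looks for a data availability statement and a dataset link in manuscript text. It is an assumption-laden toy: the patterns, the 500-character window, the flag messages, and the function `check_data_availability` are all invented for illustration, and it does not represent how DataSeer Snapshot or any other tool actually works.

```python
import re

# Hypothetical patterns; a real tool would need far more robust detection.
DAS_HEADING = re.compile(
    r"data\s+(and\s+code\s+)?availability(\s+statement)?", re.IGNORECASE
)
LINK_OR_DOI = re.compile(r"https?://\S+|\b10\.\d{4,9}/\S+")
ON_REQUEST = re.compile(r"(up)?on\s+(reasonable\s+)?request", re.IGNORECASE)


def check_data_availability(text):
    """Return a list of human-readable flags for the submitting author."""
    match = DAS_HEADING.search(text)
    if match is None:
        return ["No data availability statement found."]

    # Inspect only the text shortly after the heading (assumed window size).
    statement = text[match.end():match.end() + 500]
    flags = []
    if not LINK_OR_DOI.search(statement):
        flags.append("Statement has no link or DOI to a dataset.")
    if ON_REQUEST.search(statement):
        flags.append("Data offered only on request; consider a repository.")
    return flags


manuscript = (
    "Data availability: Data are available from the authors "
    "upon reasonable request."
)
for flag in check_data_availability(manuscript):
    print(flag)
```

An empty list means the check found nothing to flag. Surfacing messages like these to the author at submission, rather than to the editor afterwards, is the kind of integration the section describes.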

3. Intervention: Using the carrot, stick, and helping hand to coach researchers to success

The idea that researchers can be motivated to comply with policies using only carrots (i.e., rewards) and sticks (i.e., punishments) is outdated. A third, and perhaps more effective, approach is accountability: meeting authors at their manuscript and pointing out any deficiencies.

- The carrot: Rewarding researchers who demonstrate transparency through recognition programs, such as certificates or tenure-friendly acknowledgments, can motivate compliance.

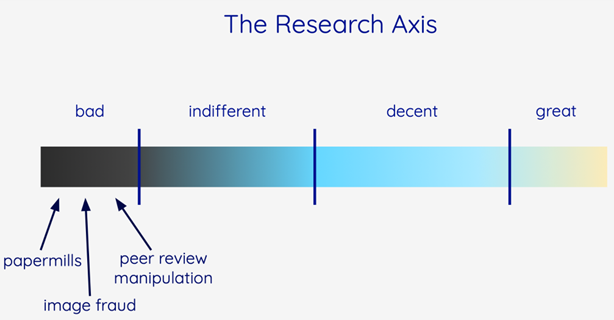

- The stick: Detecting and addressing research misconduct, such as figure manipulation or plagiarism, helps maintain the integrity of the scientific record.

- Accountability: Most errors are unintentional. AI tools provide automated guidance to authors and editors during the submission process, helping them meet consistent standards while freeing editors to focus on more complex and strategic parts of their job.

Balanced intervention not only improves compliance and the published research but also fosters a lasting culture of excellence in research practices. Over time, researchers and journals will gradually progress from less rigorous and less transparent communication practices to the more collaborative, open, and reproducible behaviors that sharing mandates are designed to encourage.

Most interventions focus on identifying and stopping bad actors. But providing support and guidance and recognizing positive behaviors are just as vital to creating widespread change.

Researchers may start with different expectations and norms around data-sharing, depending on their discipline and training. Tailored interventions can lead them up the research integrity ladder at their own pace, gradually improving transparency and research quality in a sustainable and lasting way.

Harnessing AI as a force for good

While AI has raised concerns about enabling large-scale research fraud, it is also a powerful tool for promoting transparency and integrity. When informed by editorial ethics and human expertise, AI can:

- Identify potential ethical concerns quickly and effectively

- Provide tailored, real-time support to authors and editors

- Celebrate researchers who exemplify integrity

- Deliver actionable insights to inform future decisions

By harnessing AI to their own ends, publishers, funders, and institutions can collectively build a more transparent, trustworthy, and policy-compliant research ecosystem.

Conflicts of Interest:

- Tim Vines is the founder and CEO of DataSeer.

- Lindsay Morton is an independent communications consultant. DataSeer is one of her clients.

- Adrian Stanley is an independent consultant, and DataSeer is one of his clients.