By Vanessa Vaughn, Senior Director, Content Services

In a recently published post on this blog, our Editorial Director Joseph Berman, shared some of his insights on what it takes to create effective assessments, delving into his years of K-12 math and science experience to reveal some essential best practices, top tips and dos and don’ts.

He concluded the article with his number one piece of advice “always review your work before considering it final.” This golden mantra should remain true for anybody in the business of preparing and developing assessments, particularly in a world where AI is likely to play a more significant role in the future.

As we constantly evolve our offering to customers, we at KGL have been taking an in-depth look at the current state of different AI applications and usages—how they’ve improved, where they might work best and how they can be of benefit in our day-to-day business, but also on the flip side—what are their limitations and to what extent can we rely upon them.

Recently, the KGL Smart Lab team gathered to explore and analyze specifically how a custom GPT (Generative Pre-trained Transformer) can be used in the assessment environment and in the production of examination items, and there were some very interesting key findings and takeaways.

1. Improved quality and consistency

The more we train and develop our custom GPT, we have been finding that the results it produces have been getting closer and closer to the types of assessment items a human might produce and expect to see as an end user.

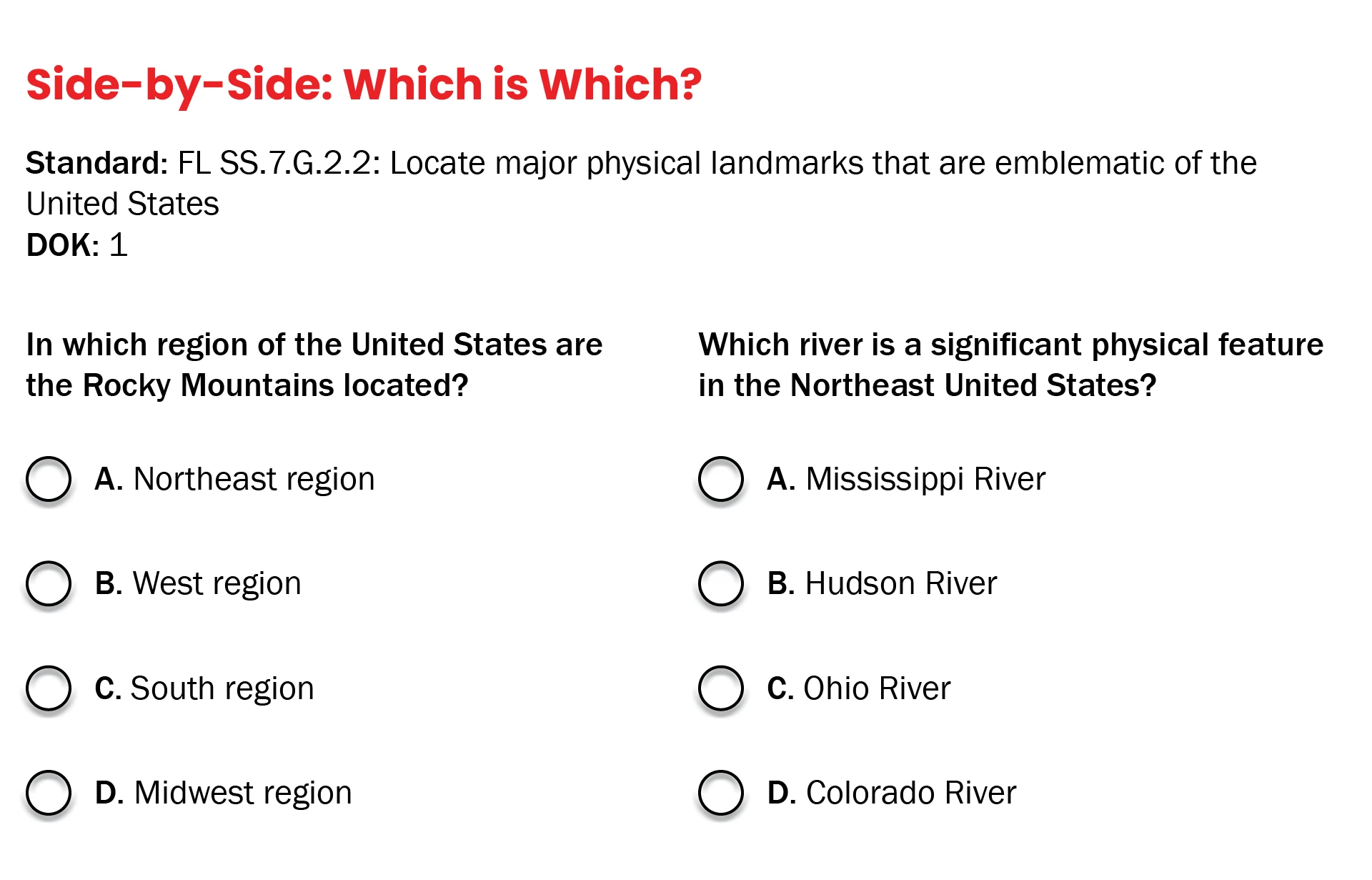

It was particularly telling that when I shared several side-by-side examples of human created items and AI generated versions, the group was unable to distinguish between the two. For example, when presented with the two assessment items below, the group guessed that the item on the left was created by our GPT, whereas it was in fact the item on the right:

2. The law of reciprocity

From the early days of working with generative AI, it has been clear that the quality of what you get out is entirely predicated on the quality of what you put in. The more content-specific prompts, inputs and training we were able to give our GPT—such as human authored passages, lessons and guidelines—the better results it could generate.

The GPT is capable of producing very high-quality, accurate and relevant outputs, but only if you provide detailed passages in the first place, rather than expecting it to deliver quality items out of thin air.

3. A tendency to go long

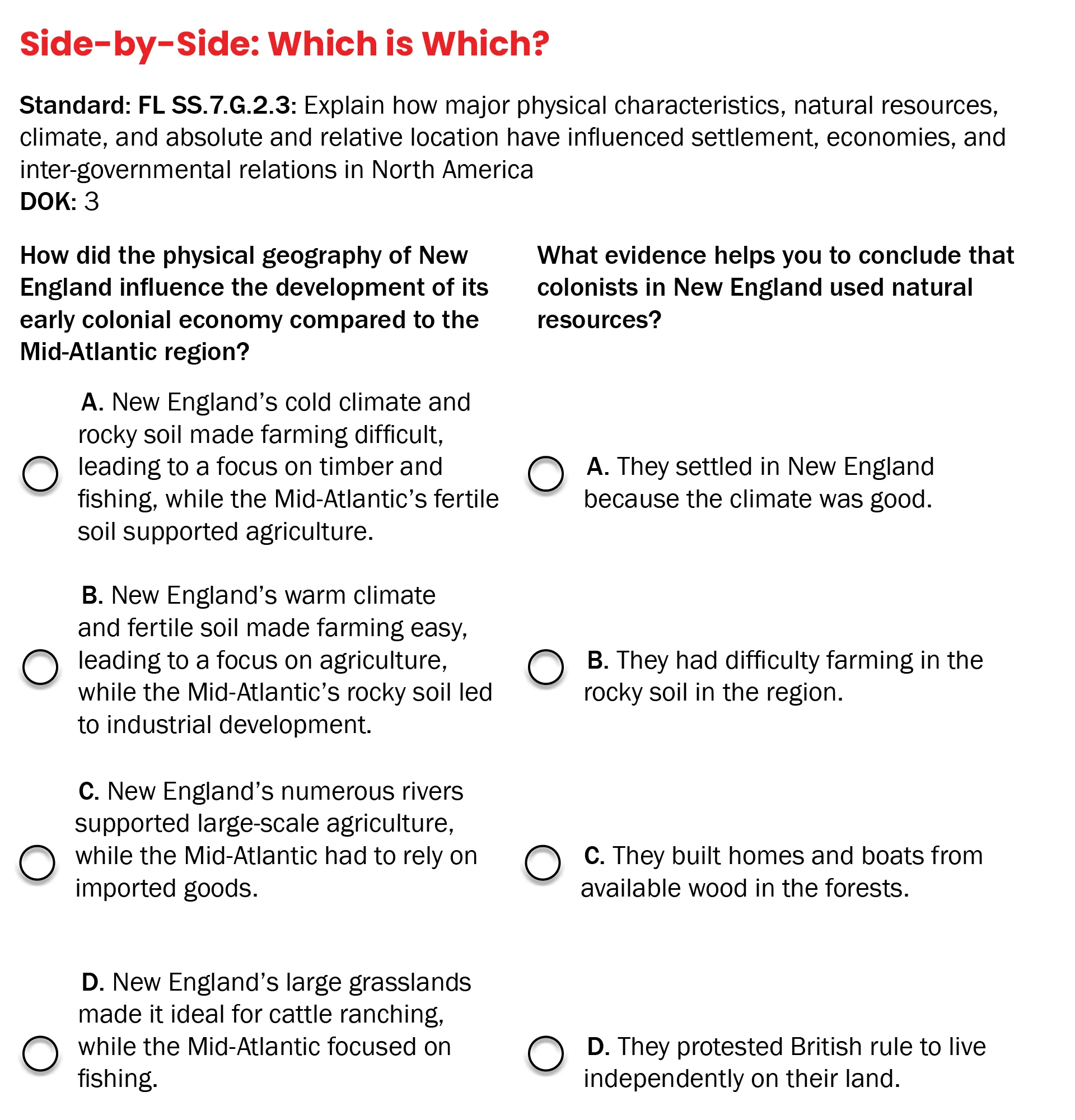

Despite our best efforts, our GPT does still appear to have a natural inclination to produce assessment items that are perhaps longer than necessary. While the example below (left) shows there is no real issue with the quality of the AI-generated assessment item, it does highlight the fact that it hasn’t yet cottoned onto the “less is more” rule that a human assessment professional lives and dies by:

4. The answer is ‘B’

Another interesting anecdote working closely with generative AI in the context of creating assessment items is that we discovered that 95% of the time, the GPT will disproportionately default to option ‘B’ as the correct answer.

While I’m unable to explain this strange quirk, it’s an example that really reinforces Joe Berman’s statement and underscores the importance of human intervention, to constantly check the bot’s homework, to review results— and then review again—polishing up items before they go any further.

Vanessa Vaughn is Senior Director, Content Services in KGL’s K-12 and Higher Education group where she oversees content development for science and humanities subjects. For more information on AI innovations for Increasing the speed and quality of content workflows, visit the KGL Smart Lab.